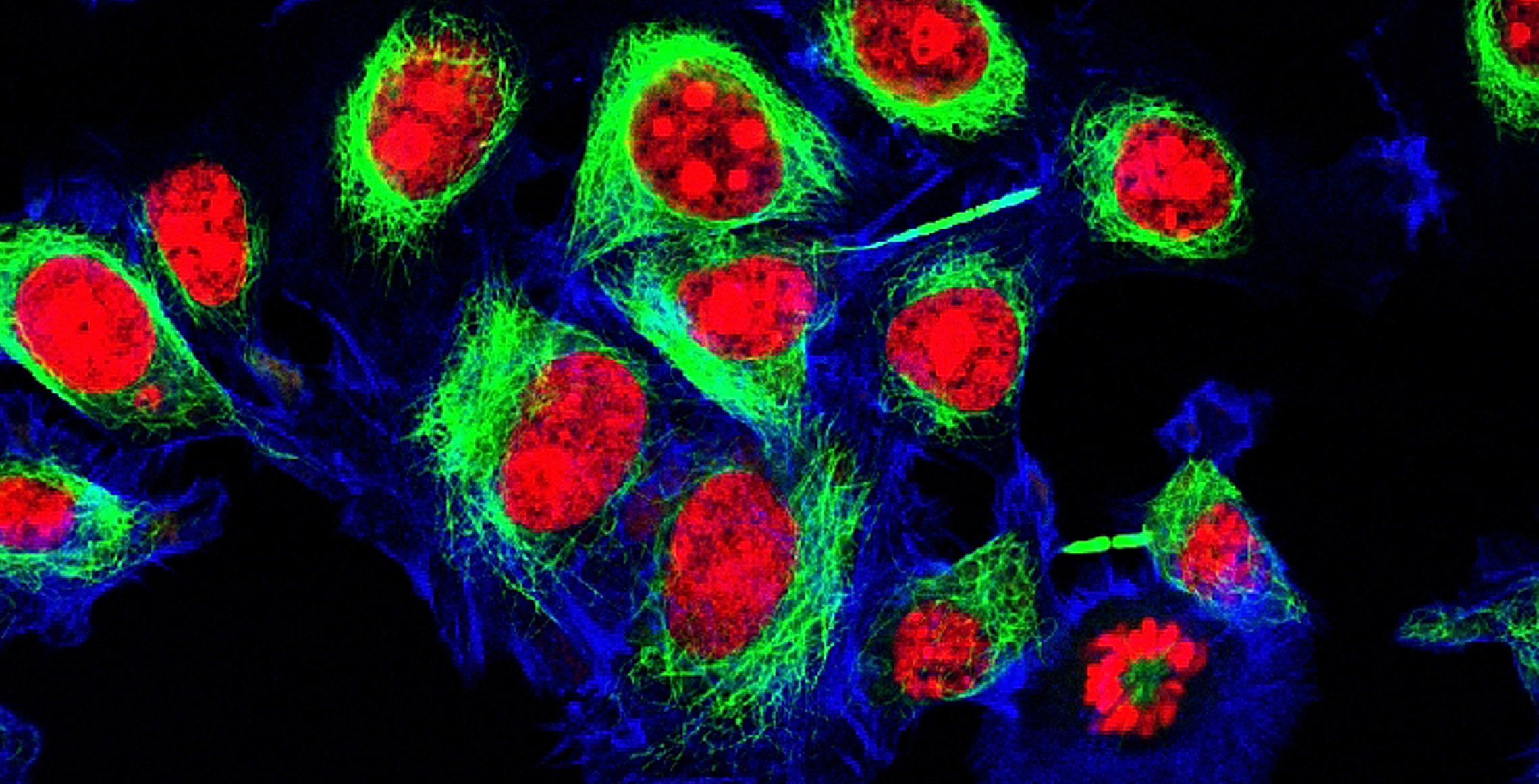

Leveraging AI in Cell Culture Analysis

Mar 22, 2023|Life Sciences, Technology

March 9, 2023|Life Sciences, Materials Science, Transformation

Recently, I’ve been working on a new Enthought Academy course titled Software Engineering for Scientists and Engineers. I’ve focused on distilling the software engineering best practices that we use at Enthought with our clients, with the twist of “what parts are most useful for a scientist who writes software for R&D?” After all, we don’t want to simply replicate an academic 12-week course in software engineering; instead, we want to teach the subset of ideas that will make the most impact on scientific programming projects in the least amount of time. Unfortunately, I lost track of time and ran into my deadline for this blog. Panic time? Not this year.

When I was new to Python, I ran into a mysterious block of code that looked something like:

Digital transformation is reshaping industries, requiring scientific organizations to adapt in order to remain competitive in a rapidly changing landscape. Data lies at the heart of this transformation, providing the foundation for strategic decision-making and innovative breakthroughs. To achieve sustained success and outpace the competition, visionary leaders understand the need not only to keep pace with digital advancements but also to stay ahead through continuous innovation and adaptation.

The answers I have given have always seemed more opinion than fact. Still, if someone asks me what language I recommend for new programmers or for a new project, unless there is a specific reason not to use Python, I recommend Python. And, if I were given a reason not to use Python, I would question that reason, just in case it was not really all that well thought out. So, why Python?

As a company that delivers Digital Transformation for Science, part of our job at Enthought is to understand the trends that will affect how our clients do their science. Below are three trends that caught our attention in 2022 that we predict will take center stage in 2023.

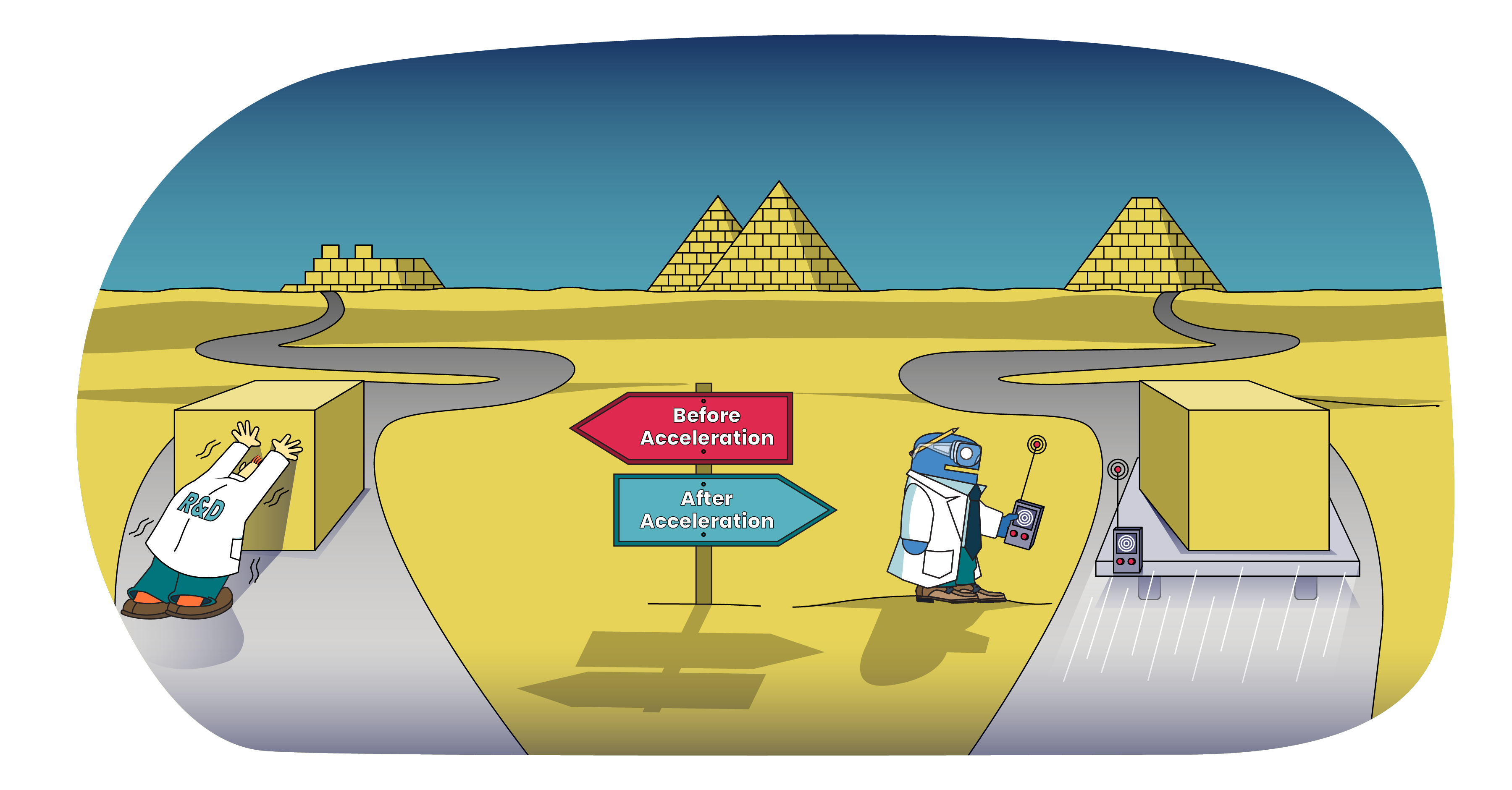

Classical mechanics teaches us that putting a body into motion requires applying force. The resulting acceleration will be the sum of the forces applied to the body, divided by the body’s mass: a = F/m. So, if we want to accelerate R&D, what are the forces we need to consider? And, what is the mass?

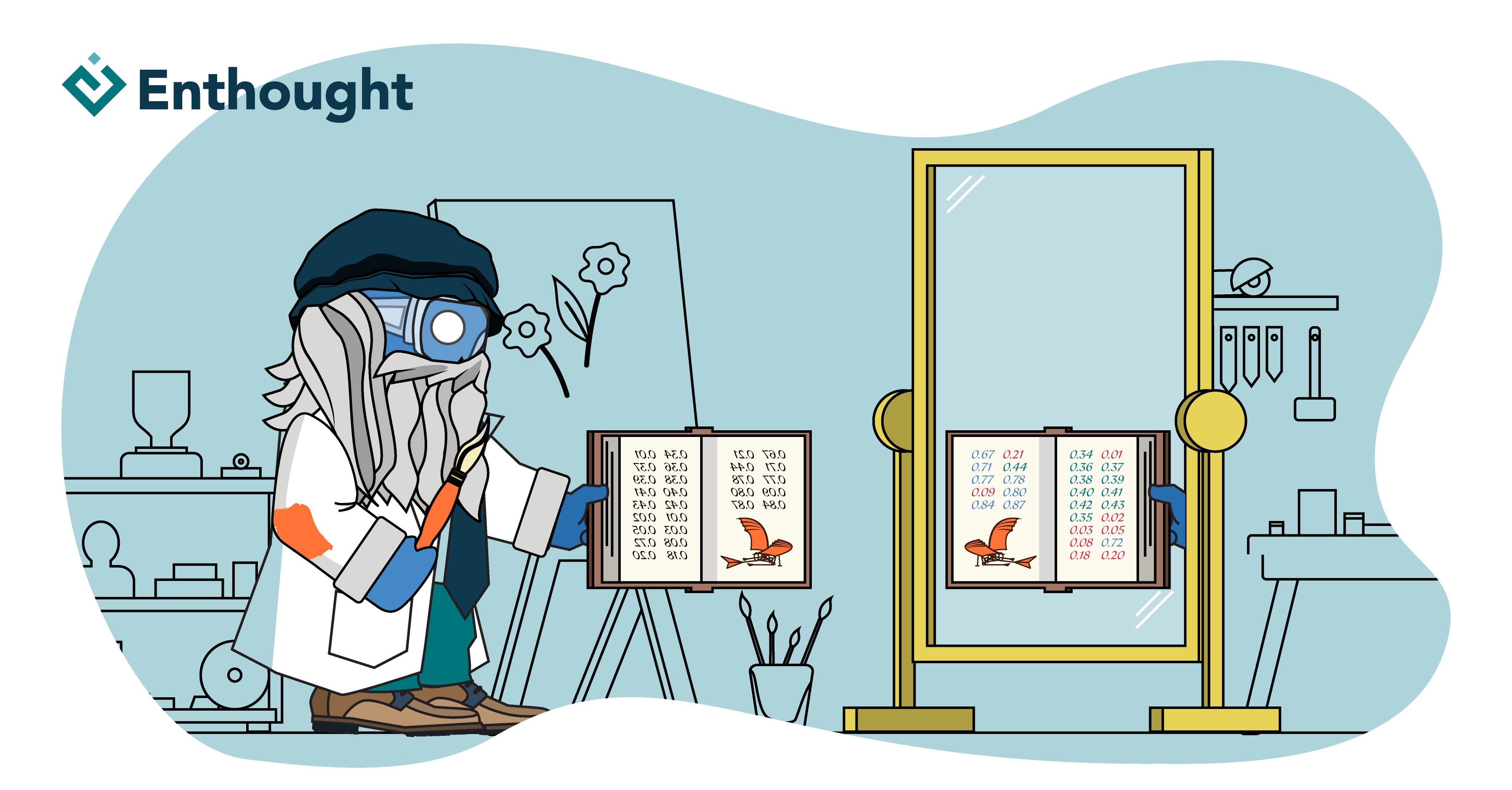

When thinking about machine learning, it is easy to be model-centric and get caught up in the details of getting a new model up and running: preparing a dataset, partitioning the training and test data, engineering features, selecting features, finding an appropriate metric, choosing a model, and tuning the hyperparameters. Being model-centric is reinforced by the fact that we don’t always have control of the data or how it was collected. In most cases, we are presented with a dataset collected by someone else and are asked what we can make of it. As a result, it is easy to just accept the data and over-fit your thinking about machine learning to the specifics of your modeling process and experience. Sometimes it is a good idea to step away from these details and remind yourself of the basic components of a model and its data, how they interact with each other, and how they evolve.

In the blog post Configuring a Neural Network Output Layer, we highlighted how to correctly set up an output layer for deep learning models. Here, we discuss how to make sense of what a neural network actually returns from its output layer. If you are like me, you may have been surprised when you first encountered the output of a simple classification neural net.
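
One common source of that surprise is that a classifier's output layer may return raw, unnormalized scores rather than probabilities. The sketch below assumes that case and shows how a softmax turns such scores into values that sum to 1. The scores, the class names, and the `softmax` helper are all hypothetical and chosen only for illustration; they do not come from any particular model or library.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing and
    # does not change the resulting probabilities.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw outputs of a three-class classifier (illustrative values).
raw_output = [2.0, 1.0, 0.1]
labels = ["cat", "dog", "bird"]  # hypothetical class names

probs = softmax(raw_output)

# The predicted class is the one with the highest probability.
predicted = labels[probs.index(max(probs))]

for label, p in zip(labels, probs):
    print(f"{label}: {p:.3f}")
print("predicted:", predicted)
```

Because softmax preserves the ordering of the scores, the predicted class is the same whether you take the largest raw score or the largest probability; the normalization only matters when you want to read the values as relative confidence.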